Back in December, we wrote about an online demonstration against AI-generated images on ArtStation. In a nutshell, one of the main reasons for this anger is that AI image generators such as Midjourney and Stable Diffusion are often trained on images gathered without the consent of the artists who created them. This also means that these artists were not paid when their work was used.

AI & NVIDIA

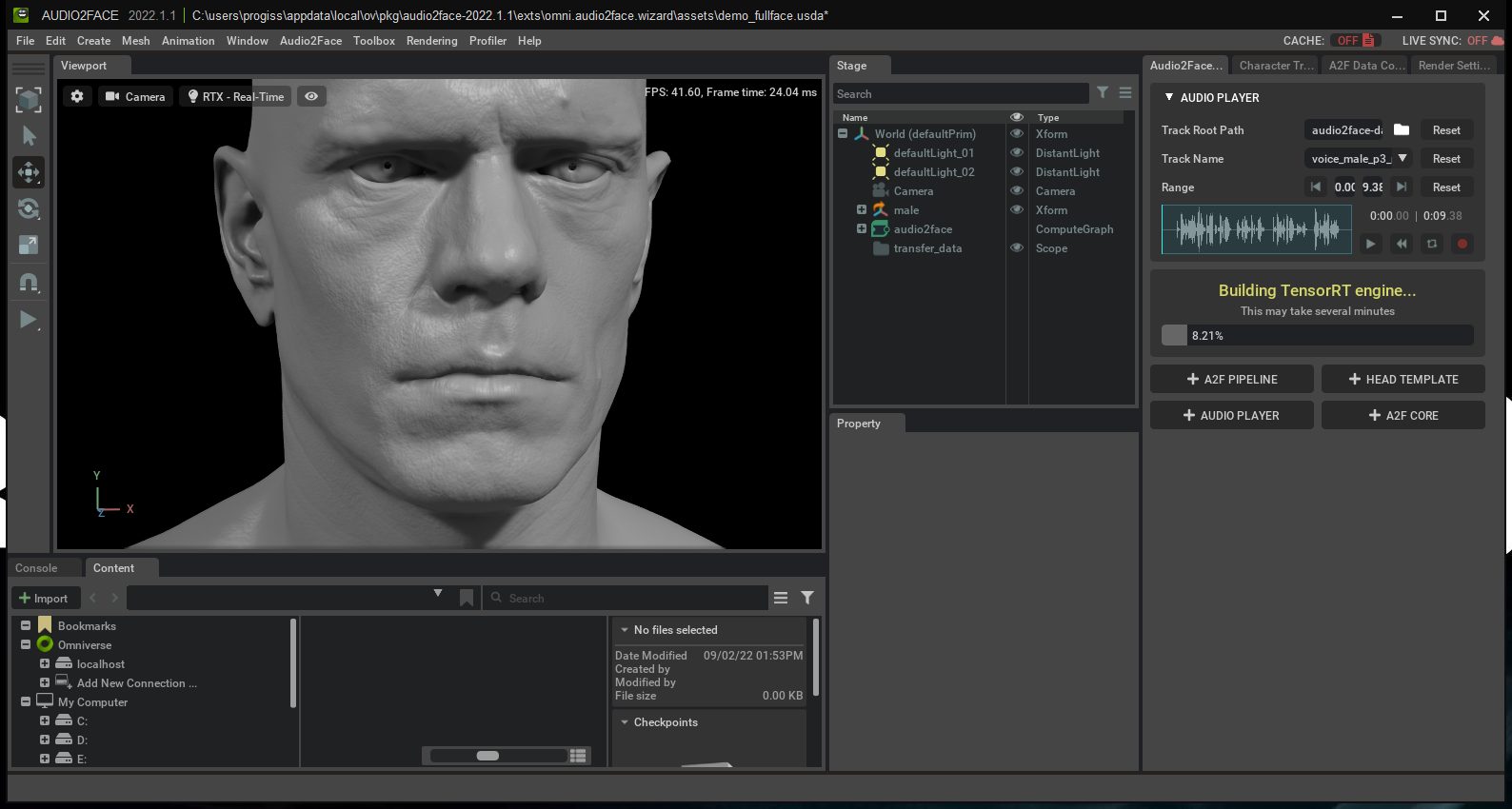

Meanwhile, NVIDIA provides several generative AI tools within Omniverse, its digital creation and collaboration platform. These tools include Audio2Face, which can generate facial animation for a 3D character from an audio clip, and Canvas, which allows artists to turn very rough sketches into high-resolution environment maps. More broadly, NVIDIA has been working on several impressive AI-based projects, and the company is clearly pushing in this direction.

This raises a few questions: how are NVIDIA's AI tools trained, and what is the company's policy when it comes to datasets?

Since NVIDIA organized a press briefing yesterday about their latest Omniverse announcements, we took this opportunity to ask them about this issue.

Yes, NVIDIA will protect artists’ rights

NVIDIA explained that it takes this question very seriously. The company wants to “ensure that only things that are licensable are being used”. NVIDIA added that this will be an ongoing effort, but that it will keep focusing on this issue “to ensure the artists’ rights are always adhered to”.

NVIDIA also gave us an example of its work on content created with AI tools: the company is part of the SemaFor program led by DARPA (Defense Advanced Research Projects Agency). The goal of SemaFor is to develop “technologies that are capable of automating the detection, attribution, and characterization of falsified media assets”, such as deepfakes created using NVIDIA’s StyleGAN3.

How do other companies tackle this issue?

This topic was also discussed at the end of 2022 during RADI-RAF, an annual event held in Angoulême (a city in southwestern France) where many French animation studios and digital arts schools discuss industry trends.

We filmed the conference in which this topic was discussed: it is available below as well as on our YouTube channel (the conference is in French).

Kinetix, a French startup focused on creating animations and emotes for virtual worlds, relies on AI to create animation from videos. The company expects new laws to address these issues. For now, though, Kinetix believes several legal questions remain unsettled: for example, who owns the copyright of an artwork created with an AI trained on copyrighted material without the knowledge of the copyright owners?

Henri Mirande (CTO – Kinetix) explained that Kinetix chose to avoid this issue altogether: to train its tools, the company either bought the rights to datasets or used its own. As Henri Mirande explains, this approach will help Kinetix avoid legal issues in the future, whereas a company that used data scraped online without checking its origin might face legal battles.

Golaem, a French company that develops artist-friendly crowd, layout, and previsualization tools, also attended the conference, and it has another interesting approach to datasets. Golaem CEO Stéphane Donikian showcased upcoming improvements for their crowd simulation tools: they want to use AI to help with the rigging of their crowds. To train these tools, Golaem wants to provide a framework to animation and VFX studios, which will then use data created by their own artists on the current project to train the AI. This dataset won’t be shared with other studios, an approach many big studios will probably find reassuring.

These answers show that many companies, from startups to giants like NVIDIA, are aware of the issues raised by AI. Hopefully, the industry will move beyond practices that many artists consider predatory, and more companies will openly share their policies on AI and datasets.